Introduction

Llama 3 70B is an openly available large language model (LLM) released by Meta. It has been extensively tested to identify and address potential performance limitations and to reduce offensive or inappropriate responses in conversational settings.

Step by Step: How to Use Llama 3 70B on AiSudo

- Open the AiSudo app.
- Click "+ New Session".
- Click "Chat with AI".
- In the "AI Model" dropdown, select "Llama 3 70B".
- Select your language and preset (if needed).
- Click "Create Session".

Model Specifications

| Spec | Value |
|---|---|
| Model Name | llama3-70b-8192 |
| Model Release Date | April 18, 2024 |
| Developer | Meta |
| Parameter Size | 70 billion |
| Model Card | meta-llama/Meta-Llama-3-70B-Instruct |
| Context Window | 8,192 tokens |
| Input | Text only |
| Output | Text only |
| Language | Multilingual |

Available Settings

The following settings are available for this model in the AiSudo app:

- System Instruction
- Max History: 16
- Max Tokens: 4096
- Temperature: 0.0 to 2.0

Additional parameters may be added in the future.
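To illustrate how these limits fit together, here is a minimal sketch that checks a set of session settings against the values listed above and against the 8,192-token context window from the specification table. The `SessionSettings` class, its field names, the allowed minimums, and the assumption that the prompt (system instruction plus history) and the reply must share the context window are all hypothetical; this is not AiSudo's actual implementation.

```python
from dataclasses import dataclass

# Limits taken from the tables above; treating them as hard caps is an assumption.
CONTEXT_WINDOW = 8192
MAX_HISTORY_LIMIT = 16
MAX_TOKENS_LIMIT = 4096
TEMPERATURE_RANGE = (0.0, 2.0)


@dataclass
class SessionSettings:
    """Hypothetical container for the AiSudo session settings."""
    system_instruction: str = ""
    max_history: int = 16
    max_tokens: int = 4096
    temperature: float = 1.0

    def validate(self, prompt_tokens: int = 0) -> list[str]:
        """Return a list of problems; an empty list means the settings look valid.

        prompt_tokens is an estimate of the tokens used by the system
        instruction plus the chat history sent with each request.
        """
        problems = []
        if not 0 <= self.max_history <= MAX_HISTORY_LIMIT:
            problems.append(f"max_history must be between 0 and {MAX_HISTORY_LIMIT}")
        if not 1 <= self.max_tokens <= MAX_TOKENS_LIMIT:
            problems.append(f"max_tokens must be between 1 and {MAX_TOKENS_LIMIT}")
        low, high = TEMPERATURE_RANGE
        if not low <= self.temperature <= high:
            problems.append(f"temperature must be between {low} and {high}")
        # Assumed: prompt and reply together must fit in the context window.
        if prompt_tokens + self.max_tokens > CONTEXT_WINDOW:
            problems.append("prompt plus max_tokens exceeds the context window")
        return problems


print(SessionSettings(temperature=2.5).validate())
# ['temperature must be between 0.0 and 2.0']
print(SessionSettings(max_tokens=4096).validate(prompt_tokens=5000))
# ['prompt plus max_tokens exceeds the context window']
```

The second call shows why a long history can matter even when every individual setting is in range: under the shared-window assumption, 5,000 prompt tokens plus a 4,096-token reply cannot fit in 8,192 tokens.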

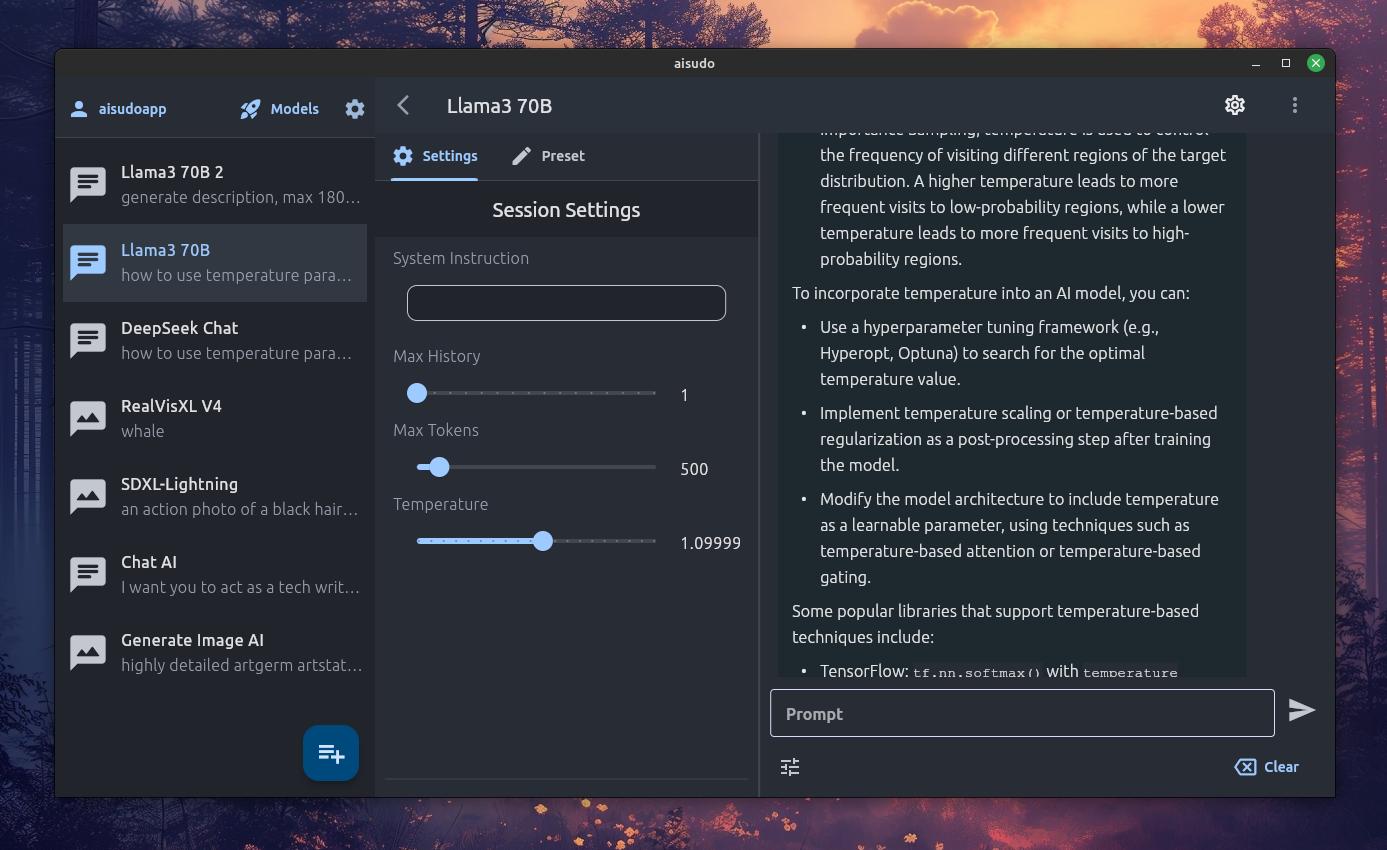

Screenshot

The image above shows a successful connection to Llama on AiSudo. Use this as a reference to confirm that your configuration is correct.


Sample Dialogues Between a User and Meta Llama 3 70B

The following conversations were generated with Meta Llama 3 70B using this configuration:

| Setting | Value |
|---|---|
| Temperature | 1 |
| Max Tokens | 128 |
| Max History | 1 |
| System Instruction | make it easy to understand by general audience |
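If you want to reproduce this configuration outside the app, the settings map onto a request in the OpenAI-style chat-completions format that many hosted Llama 3 providers accept. The sketch below only builds the request body and sends nothing. The `build_request` helper is hypothetical, the exact body format is an assumption (check your provider's documentation), and reading Max History as "number of earlier messages kept" is an assumption about how AiSudo applies that setting. The model ID `llama3-70b-8192` comes from the specification table above.

```python
import json


def build_request(history, user_message, *, system_instruction,
                  temperature, max_tokens, max_history,
                  model="llama3-70b-8192"):
    """Build a chat-completions style request body (hypothetical helper).

    history is a list of {"role": ..., "content": ...} dicts from earlier turns.
    Only the most recent `max_history` of them are kept (assumed behavior).
    """
    # history[-0:] would return the whole list, so 0 is handled separately.
    recent = history[-max_history:] if max_history > 0 else []
    messages = [{"role": "system", "content": system_instruction}]
    messages.extend(recent)
    messages.append({"role": "user", "content": user_message})
    return {
        "model": model,
        "messages": messages,
        "temperature": temperature,
        "max_tokens": max_tokens,
    }


# An earlier exchange, abbreviated for illustration.
history = [
    {"role": "user", "content": "what is an ai model?"},
    {"role": "assistant", "content": "An AI model is a programmed set of instructions..."},
]

# The sample-dialogue configuration: temperature 1, 128 tokens, history of 1.
body = build_request(
    history,
    "how to use temperature parameter in Llama 3 70B",
    system_instruction="make it easy to understand by general audience",
    temperature=1,
    max_tokens=128,
    max_history=1,
)
print(json.dumps(body, indent=2))
```

With Max History set to 1, only the most recent earlier message travels with the new question, so the model sees very little of the prior conversation.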

Example 1

User: what is an ai model?

Llama 3 70B:

An AI model is a programmed set of instructions that enables a computer to perform tasks that typically require human-like intelligence, such as understanding language, recognizing images, or making predictions. Think of it like a recipe for your computer to follow, but instead of baking a cake, it's solving problems or making decisions.

At its core, an AI model is a mathematical representation of a problem or a process. It's made up of algorithms, data, and rules that work together to enable a computer to learn from experience, adapt to new situations, and make decisions or take actions accordingly. For instance, an AI model might be trained to recognize objects in images, generate text, or predict

Example 2

User: how to use temperature parameter in Llama 3 70B

Llama 3 70B:

In LLaMA 3 70B, the temperature parameter is a numerical value that controls the randomness or uncertainty of the model's output. It's called "temperature" because it's similar to the concept of temperature in thermodynamics, where higher temperatures mean more energy and randomness.

In the context of language models, the temperature controls how likely the model is to produce novel or unexpected responses.

How does the temperature parameter work?

Here's how the temperature parameter affects the model's output:

Low temperature (e.g., 0.1) : The model will generate more predictable and conservative responses. It will stick to the most likely or "safest" answers, which might be repetitive or boring.

High temperature (e.g., 1.0 or higher): The model will generate more diverse and creative responses. It will take more risks and produce more unexpected or novel answers, which might be more interesting but also more prone to errors.

Medium temperature (e.g., 0.5): The model will generate responses that balance predictability and creativity. It's a good starting point for most use cases.
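The behavior described in this answer follows from how temperature is commonly applied during sampling: the model's raw scores (logits) for candidate next tokens are divided by the temperature before a softmax turns them into probabilities. The sketch below uses three made-up logits to show the effect; the numbers are illustrative and not taken from Llama 3, and it assumes the standard temperature-scaled softmax rather than any AiSudo-specific behavior. A temperature of exactly 0 is usually treated as greedy decoding (always pick the top token), so the function handles it separately.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities, scaled by temperature."""
    if temperature == 0:
        # Greedy decoding: all probability on the highest-scoring token.
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    # Subtract the max before exponentiating for numerical stability.
    top = max(scaled)
    exps = [math.exp(x - top) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
for t in (0.1, 0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

At 0.1 nearly all of the probability lands on the first token, while at 2.0 it is spread much more evenly across all three, which matches the "predictable" versus "creative" behavior the answer describes.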

Example 3

User: what is max tokens in Llama 3 70B?

Llama 3 70B:

In the context of AI models like LLaMA, "tokens" refer to individual units of text, such as words, punctuation, or special characters.

The "max tokens" limit in LLaMA 3 70B (a large language model) refers to the maximum number of tokens that can be processed or generated by the model in a single input or response.

To put this into perspective, a token is roughly equivalent to a word or a character. So, 2048 tokens would translate to:

* Around 500-750 words (depending on the word length)
* A few paragraphs of text
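The word counts in this answer are the model's own estimate. A commonly cited rule of thumb for English text is roughly 0.75 words per token, which would put 2,048 tokens closer to 1,500 words; actual ratios vary with the tokenizer, language, and content, and Llama 3's large vocabulary may fit somewhat more words per token. The sketch below applies that assumed ratio. It is an approximation, not Llama 3's tokenizer; for exact counts you would run the model's own tokenizer.

```python
import math

# Assumed rule of thumb for English text; real ratios depend on the tokenizer.
WORDS_PER_TOKEN = 0.75


def estimate_words(tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Rough number of English words that fit in a token budget."""
    return round(tokens * words_per_token)


def estimate_tokens(text: str, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Rough token count for a piece of text, based on its word count."""
    return math.ceil(len(text.split()) / words_per_token)


for budget in (128, 2048, 4096, 8192):
    print(budget, "tokens ~", estimate_words(budget), "words")
```

Under the same assumption, the 128-token cap used for these sample dialogues allows only about a hundred words per reply, which likely explains why Example 1 stops mid-sentence.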

Try Llama 3 70B on AiSudo Today

The AiSudo app now includes Llama 3 70B alongside the other AI models it supports, and the app is available in most countries.